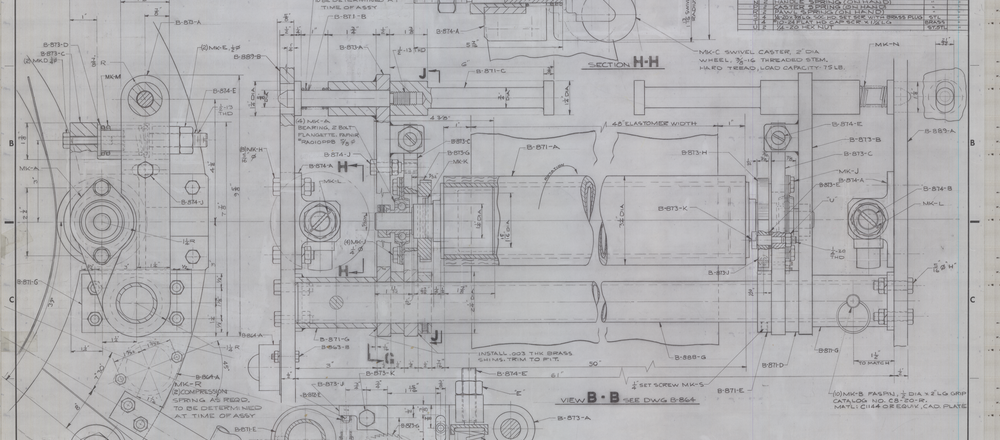

The first GREC Engineering Drawing Challenge was held in 2015. Since then, more than 800 high-definition engineering drawings have been digitized and made available to the research community, amounting to approximately 400 GB of unique image data. The collection is available on the Lehigh University DAE server.

The 2015 edition gave rise to a number of challenges and ideas specifically targeted at Graphics Recognition, some of which can be found here. They remain relevant, open questions that the research community still needs to address.

This year, we are launching a different, complementary challenge …

Transforming (partial) Failure into Opportunity

After the 800 engineering drawings had been digitized, a quality inspection of the data revealed artifacts induced by the scanning process. These artifacts certainly stem from flaws in the digitization apparatus, possibly a combination of hardware failures and firmware compensation errors.

Digitization was performed by Ingecap on a ColorTrack smartLF Gx+42 at 400 dpi. The images are full-color lossless TIFF files of approximately 15000 × 9000 pixels, about 400 MB each. They can be downloaded here.
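As a quick sanity check of these figures, the sketch below recomputes the uncompressed size and paper extent of one scan. It assumes 24-bit RGB (three 8-bit channels), which is not stated above. Under that assumption, 15000 × 9000 pixels come to about 405 MB, in line with the quoted 400 MB per file, and 400 dpi corresponds to a sheet of 37.5 × 22.5 inches. The collection total depends on the exact image sizes and number of drawings, so the 800-scan figure is only indicative.

```python
# Assumption: 24-bit RGB, i.e. 3 channels of 8 bits, stored uncompressed.
CHANNELS = 3
BYTES_PER_CHANNEL = 1

def raw_size_bytes(width_px: int, height_px: int) -> int:
    """Uncompressed pixel payload of one image, in bytes."""
    return width_px * height_px * CHANNELS * BYTES_PER_CHANNEL

def physical_size_in(pixels: int, dpi: int) -> float:
    """Length on paper, in inches, covered by `pixels` at `dpi`."""
    return pixels / dpi

per_image = raw_size_bytes(15_000, 9_000)
print(f"one scan:     {per_image / 1e6:.0f} MB")        # 405 MB
print(f"800 scans:    {800 * per_image / 1e9:.0f} GB")  # 324 GB
print(f"sheet extent: {physical_size_in(15_000, 400)} x "
      f"{physical_size_in(9_000, 400)} in")             # 37.5 x 22.5 in
```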

On the one hand, the artifacts are significant enough to bias the results of standard image analysis. The overall quality of the digitization can therefore be considered incompatible with the standards required for high-quality reference research data (hence the “failure”). On the other hand, a close inspection of the nature of the artifacts (their regularity, their repetitive frequency pattern, their non-destructive nature …) and the volume of available data (400 GB), most of which is of more than acceptable quality, make it plausible that well-thought-out post-processing software could filter out these artifacts. This is also an opportunity to conduct some exciting state-of-the-art benchmarking and see where our research community currently stands with respect to large-scale, real-world document image analysis (Is the problem at hand “easy”? Is it “interesting”? Can we effectively handle it?).

Presentation and Preliminary Artifact Analysis

The ColorTrack series are roller scanners with a fixed 1D array of CCD sensors under which the document is fed and progressively moved by a traction system. This is important because it allows us to make some very strong assumptions about the artifacts: since every scan line is captured by the same sensor elements, a defect in one element affects the same pixel column throughout the image. The artifacts vary greatly in intensity, from extremely weak and barely visible to very strong.
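This assumption can be illustrated with a minimal NumPy sketch. The per-element gain and offset model and the defect values below are illustrative assumptions, not a diagnosis of the actual scanner: each output row is one scan line read by the same sensor array, so a single biased element tints its whole column.

```python
import numpy as np

def simulate_line_scan(page, gain, offset):
    """Toy model of a fixed 1D sensor array reading a page line by line.

    page:   (H, W, 3) float array in [0, 1], the "true" document.
    gain:   (W, 3) multiplicative response of each sensor element (assumed).
    offset: (W, 3) additive bias of each sensor element (assumed).
    Row i of the result is scan line i. Every line is read by the same
    elements, so a faulty element alters its entire pixel column.
    """
    scanned = page * gain[np.newaxis] + offset[np.newaxis]
    return np.clip(scanned, 0.0, 1.0)

# White 6 x 8 page with one black horizontal rule; sensor element 3 gets a
# hypothetical bias removing some green and blue (a reddish streak).
page = np.ones((6, 8, 3))
page[2] = 0.0
gain = np.ones((8, 3))
offset = np.zeros((8, 3))
offset[3] = [0.0, -0.25, -0.25]
scan = simulate_line_scan(page, gain, offset)
# On the black rule the negative bias is clipped away; elsewhere column 3 is tinted.
print(scan[:, 3, 1])  # green channel of column 3
```

This toy model only reproduces full-length artifacts; partial-length ones would require the defect to change from one scan line to the next.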


We have identified three types of artifacts that will probably require different handling and filtering approaches. We presume they are listed below in order of increasing difficulty.

- Strong full length artifacts

- Soft full length artifacts

- Partial length artifacts
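To make these categories operational, one could classify each suspect column from its per-scan-line deviation profile. The thresholds, the 0 to 1 intensity scale and the definition of "deviation" below are hypothetical choices for illustration; the challenge does not define them. In practice, pixels where the streak is invisible (for example where it crosses saturated black line work) would lower the affected fraction, so real thresholds need care.

```python
from statistics import median
from typing import Sequence

# Hypothetical thresholds on a 0-1 intensity scale; the challenge defines none.
ACTIVE_THRESHOLD = 0.02      # a scan line counts as affected above this deviation
STRONG_THRESHOLD = 0.10      # median deviation separating "strong" from "soft"
FULL_LENGTH_FRACTION = 0.95  # share of affected scan lines needed for "full length"

def classify_streak(deviation: Sequence[float]) -> str:
    """Map one column's per-scan-line deviation profile to an artifact type.

    deviation[i] is the absolute difference, on scan line i, between the
    suspect column and an estimate of its clean value (for instance the mean
    of its left and right neighbours).
    """
    affected = [d for d in deviation if d > ACTIVE_THRESHOLD]
    if not affected:
        return "clean"
    if len(affected) / len(deviation) < FULL_LENGTH_FRACTION:
        return "partial length"
    if median(affected) > STRONG_THRESHOLD:
        return "strong full length"
    return "soft full length"
```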

Because of their supposed origin (a hardware or firmware defect in some of the CCD sensors), all artifacts are perfectly vertical and consistent in color. Most artifacts cover the whole height of the image. A minority appear only on some scan lines and therefore do not cover the entire height. A sample full-resolution image is available here.
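Given these properties, a simple baseline for full-length artifacts is to compare each column's median over all rows with the median of neighbouring columns. The NumPy sketch below does this; the window size, threshold factor `k` and the 8-bit `min_abs` floor are hypothetical parameters. It is deliberately naive: it will also flag genuine full-height vertical lines such as drawing frames, and a median over the whole height will tend to miss partial-length artifacts.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def find_streak_columns(img, window=15, k=6.0, min_abs=2.0):
    """Return the indices of columns whose whole-height median departs from
    that of neighbouring columns (candidate full-length artifacts).

    img:     (H, W) or (H, W, C) array, e.g. 8-bit RGB.
    window:  odd number of neighbouring columns used for the local baseline.
    k:       threshold in units of the median absolute deviation (MAD).
    min_abs: absolute floor on the threshold, in pixel-value units.
    """
    a = np.asarray(img)
    if a.ndim == 2:
        a = a[:, :, np.newaxis]
    # One value per column and channel. Drawings are mostly background, so the
    # median ignores sparse line work, while a constant-colour streak shifts it.
    profile = np.median(a, axis=0)                           # (W, C)
    pad = window // 2
    padded = np.pad(profile, ((pad, pad), (0, 0)), mode="edge")
    windows = sliding_window_view(padded, window, axis=0)    # (W, C, window)
    baseline = np.median(windows, axis=-1)                   # local expected value
    resid = np.abs(profile - baseline).max(axis=1)           # worst channel per column
    mad = np.median(np.abs(resid - np.median(resid)))
    return np.flatnonzero(resid > max(k * mad, min_abs))
```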

The Challenge

The challenge consists of finding an efficient way to remove the artifacts by leveraging both the specific context of the data and the structural properties of the noise itself. This is a unique opportunity to apply and compare state-of-the-art knowledge from the Graphics Recognition and broader Document Image Analysis domains on a large-scale, real-world problem, and to create the conditions for collaboration, exchange and discussion on large-scale document image processing.


Requirements and Contributions

To compete in and contribute to the challenge, contenders should provide:

- a standalone software solution (executable code, script or network service) that takes a single image as input and outputs a filtered image with the same dimensions and encoding, in which the artifacts have been removed and replaced by plausible pixels reconstructing the presumed original image;

- a full description of the techniques and methods applied to achieve the filtering; this description should take the form of a publishable or published paper (typeset according to the IEEE templates).
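The input/output contract of the first item can be illustrated with a minimal sketch. It assumes NumPy and Pillow, and assumes the defective columns are already known; `inpaint_columns` and `filter_tiff` are hypothetical names. Each listed column is simply replaced by linear interpolation between its nearest unlisted neighbours, a naive baseline rather than a method endorsed by the organizers. Shape and dtype are preserved, and the TIFF is re-saved with the source file's compression and resolution when Pillow reports them.

```python
import numpy as np

def inpaint_columns(img, cols):
    """Replace the listed pixel columns by linear interpolation between the
    nearest unlisted columns on either side; shape and dtype are preserved.
    Works for (H, W) and (H, W, C) arrays."""
    out = img.copy()
    width = img.shape[1]
    bad = np.array(sorted({int(c) for c in cols if 0 <= int(c) < width}), dtype=np.intp)
    good = np.setdiff1d(np.arange(width), bad)
    if bad.size == 0 or good.size == 0:
        return out
    # For each bad column, locate the closest good column on each side.
    idx = np.searchsorted(good, bad)
    left = good[np.clip(idx - 1, 0, good.size - 1)]
    right = good[np.clip(idx, 0, good.size - 1)]
    # At the image border only one side exists; then left == right and t = 0.
    span = np.where(right != left, right - left, 1)
    t = np.where(right != left, (bad - left) / span, 0.0)
    for c, lo, hi, w in zip(bad, left, right, t):
        # Convert one column at a time to avoid a multi-GB float copy.
        filled = (1.0 - w) * img[:, lo].astype(np.float64) + w * img[:, hi].astype(np.float64)
        if np.issubdtype(img.dtype, np.integer):
            info = np.iinfo(img.dtype)
            filled = np.clip(np.rint(filled), info.min, info.max)
        out[:, c] = filled.astype(img.dtype)
    return out

def filter_tiff(src, dst, cols):
    """Read a TIFF, repair the given columns, and write a TIFF of the same size."""
    from PIL import Image  # Pillow; imported here so inpaint_columns needs only NumPy
    Image.MAX_IMAGE_PIXELS = None  # ~135-megapixel scans exceed Pillow's default bomb threshold
    with Image.open(src) as im:
        info = dict(im.info)
        arr = np.asarray(im)
    fixed = inpaint_columns(arr, cols)
    # Carry over compression and resolution when Pillow reports them.
    kwargs = {key: info[key] for key in ("compression", "dpi") if key in info}
    Image.fromarray(fixed).save(dst, format="TIFF", **kwargs)
```

For example, with hypothetical file names and column indices: `filter_tiff("scan.tif", "filtered.tif", [4211, 4212])`.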

The standalone-solution requirement is simply what allows us to assess, compare and evaluate the various contributions in a homogeneous and straightforward way, without any possible bias from parameter tuning.

It goes without saying that contenders may integrate pretraining and parameter fine-tuning on this specific data set into their proposed solutions.


Evaluation and Assessment

This challenge has the particular property of having neither a predetermined formal “ground-truth” solution nor the traditional training, testing and evaluation subsets. This makes the evaluation and assessment of contributions a challenge in itself.

For evaluation, we will combine two techniques: statistical metrics designed to evaluate performance without reference data, as published in [1] and [2], and crowd-sourced peer evaluation.

The latter will consist of randomly sampling relevant image patches and submitting them to human evaluators (typically the contestants), who will be asked: “Given the original image patch, which of two randomly selected contributed methods removes the artifacts better?” Compiling the answers over a reasonable yet significant number of patches and tallying them with a Condorcet voting method will make it possible to rank all contributing methods.
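The call does not say which Condorcet method will be used. As one possible choice, the sketch below implements the Copeland method, which is Condorcet-consistent (a method that beats every other method head-to-head always ranks first); Schulze or ranked pairs would be alternatives. The vote format, one `(winner, loser)` pair per patch comparison, is a hypothetical assumption.

```python
from collections import defaultdict
from itertools import combinations

def copeland_ranking(votes):
    """Rank methods from pairwise human judgements (Copeland method).

    votes: iterable of (winner, loser) pairs, one per patch comparison.
    A head-to-head contest between A and B is won by whichever was preferred
    more often; a method's score is contests won minus contests lost.
    Returns (ranking, scores); ties are broken alphabetically.
    """
    wins = defaultdict(int)
    methods = set()
    for winner, loser in votes:
        wins[(winner, loser)] += 1
        methods.update((winner, loser))
    score = {m: 0 for m in methods}
    for a, b in combinations(sorted(methods), 2):
        if wins[(a, b)] > wins[(b, a)]:
            score[a] += 1
            score[b] -= 1
        elif wins[(b, a)] > wins[(a, b)]:
            score[b] += 1
            score[a] -= 1
    ranking = sorted(methods, key=lambda m: (-score[m], m))
    return ranking, score
```

Pairs that were never compared count as ties, so with few patches the ranking can be coarse; sampling enough patches per pair matters more than the choice of tally.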


Tentative Timeline and Deadlines

Contestants can join and contribute at any time in the process.

- Phase 1: announcement and launch – GREC+ICDAR 2017

- Phase 2: progress report and intermediate results – DAS 2018

- Phase 3: final results and evaluation – GREC+ICDAR 2019

A dedicated website and related services for assessing and comparing methods will be set up in due time. In the meantime, inquiries can be sent via the contact form or by email to eng-drawings-contest@loria.fr.